Digital platforms often trick users into giving up their personal data or buying particular products. These “dark patterns” go against European legislation, but authorities are struggling to combat them.

It happens to everyone: you discover you’ve subscribed to some newsletter you’ve never heard of, or you knowingly subscribe because it’s the only way to access a specific website or app. Like this:

@darkpatterns On log in, you either accept marketing emails or cancel which logs you out. No way to opt out here. That’s not good UX @Gumtree #darkpattern pic.twitter.com/FzTU87olyZ

– Sándor Krisztián (@christiansandor) October 14, 2019

In both cases above, we have victims of “dark patterns”: design choices that push users to perform actions they would not otherwise perform. These can be graphical, textual or other interface elements which pressure the user, in various ways, into a particular behaviour. For example, it might be a pre-checked box underneath a confirmation button, as is the case with Eventbrite:

#darkpattern in Eventbrite’s last step when you register for tickets. ‘Go To My Tickets’ not only signs you up for email marketing but it’s already opt-in for you.

Sneaky + interfering the interface.

cc: @darkpatterns pic.twitter.com/yVn67BMwsR– Andrei Stroescu (@AndreiStroescu1) September 23, 2019

It can be even worse, however: try to find the option to cancel a Grammarly subscription in the image below.

Hey @Grammarly , kinda shitty UX trying to hide the button to cancel my subscription. #darkuxpattern #ux @darkpatterns pic.twitter.com/kClhGa6cdK

– Andreas Lee-Norman (@AndreasNorman) September 3, 2019

Below, a user wanted to unsubscribe from Yahoo Sports’ marketing messages. A large, colorful button says “No, cancel” (cancel what, exactly? The subscription, or the decision to cancel?). Meanwhile, the useful options are placed underneath, in faded grey.

@darkpatterns Trying to unsubscribe, so which option is colored, buttonized, front and center? Cancel, of course. pic.twitter.com/qQeklALMZW

– Brian Hershey (@BrianHershey) August 30, 2019

We could continue with plenty more examples, but this should be a familiar experience to all readers. Should you want more examples, you can always follow the @darkpatterns Twitter account, created by UX (User Experience) expert Harry Brignull, who was also among the first to propose a taxonomy of dark patterns.

These methods are especially insidious because they rely (effectively, as various studies have shown) on cognitive biases: the “weaknesses” which lead us to make irrational choices, for example when we want to get rid of a particular distraction (a banner), or when we’re in a hurry to get something done (sign up to a social network). These user traps can be placed into two broad categories: those that influence purchasing decisions and those that extract personal data.

Dark patterns in purchasing decisions

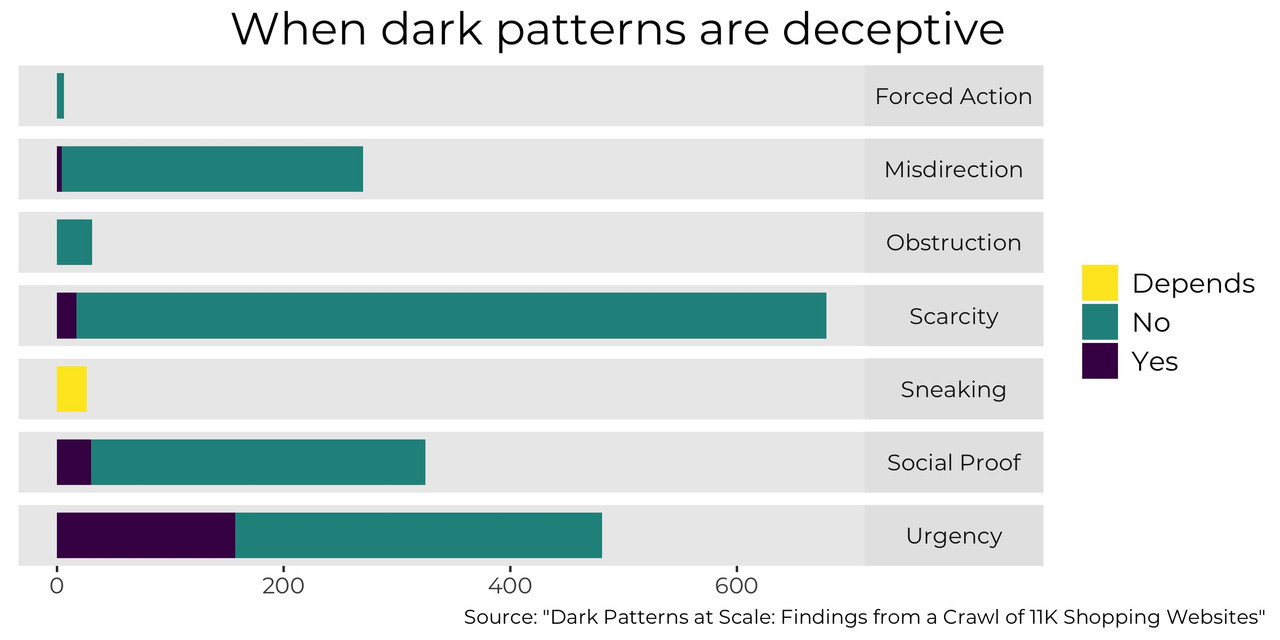

In the first case, dark patterns, in a more or less deceptive manner, lead the user to buy goods or services which they would not have otherwise bought. A study published in September 2019 by a research group led by Arunesh Mathur revealed 1,818 dark patterns (of which 208 were clearly deceptive) spread across 1,254 websites, from a sample size of around 11,000 sites, chosen from the most popular sites according to Alexa ranking.

The crawling software used to conduct the study is available on GitHub, so that other researchers can do further research. It simulates the behaviour of a normal user, choosing products on e-commerce sites, placing them in a basket, and stopping just before payment. Over this time, various potential influencing strategies are revealed, such as a count-down alerting users that an offer is about to end. This dark pattern belongs in the “urgency” category (in a taxonomy which partly reproduces that of Brignull): of the 393 timers encountered by the crawler, almost 40 percent only existed to pressure the user, even when the offer was not in fact about to end.
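To make the idea of a “fake” timer concrete, here is a minimal sketch in Python. It is not the code of the crawler described above; it only illustrates one plausible check, on the assumption that a page can be visited twice and the remaining time read off on each visit. The names `TimerObservation`, `implied_deadline` and `timer_looks_fake` are hypothetical, as is the five-second tolerance.

```python
from dataclasses import dataclass


@dataclass
class TimerObservation:
    """One reading of a countdown timer (hypothetical structure)."""
    observed_at: float        # when the page was loaded, in seconds since epoch
    seconds_remaining: float  # what the countdown displayed at that moment


def implied_deadline(obs: TimerObservation) -> float:
    """The moment at which the offer should end, according to this reading."""
    return obs.observed_at + obs.seconds_remaining


def timer_looks_fake(first: TimerObservation,
                     second: TimerObservation,
                     tolerance: float = 5.0) -> bool:
    """Flag a timer whose deadline moves later between two visits.

    A genuine offer has a fixed end time, so both readings should imply
    (roughly) the same deadline. A timer that restarts on every visit
    implies a later deadline each time.
    """
    return implied_deadline(second) - implied_deadline(first) > tolerance


# A timer that shows "10:00" on both visits, five minutes apart, has restarted.
restarted = timer_looks_fake(TimerObservation(1000.0, 600.0),
                             TimerObservation(1300.0, 600.0))

# A timer that has counted down by the elapsed five minutes is consistent.
consistent = timer_looks_fake(TimerObservation(1000.0, 600.0),
                              TimerObservation(1300.0, 300.0))
```

A check like this would only catch timers that restart. A timer that counts down honestly to an offer that then stays available would need a different test, such as revisiting the page after the deadline.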

The “urgency” category is thus the one most concerned with deceptive messages. Next is “social proof”: messages alerting the user that a certain number of people are looking at the same product (encouraging them to make their decision before anyone else), or that another customer has just bought it (encouraging them to do the same). Of the 335 cases in this category, thirty (almost nine percent) were deceptive: the messages were randomly generated, solely to make the user think that there was a lot of interest in the product in question.

The main limitation of this study is that it was only performed on textual examples, and only on English-language websites. This puts the emphasis on sites which are generally outside of Europe. However, looking at the addresses, we see that 143 sites can be traced to Britain, and 11 to Ireland. In order to study the European context, it would be useful to adapt the crawler to work on languages other than English.

Dark patterns and data protection

When it comes to dark patterns which push users into handing over personal data, there are two major types: subscription methods for services such as Facebook or Google, and the cookie banner.

According to a report by Forbrukerrådet, a Norwegian government agency for consumer protection, Facebook and Google (and, to a lesser extent, Microsoft) are intrusive “by default”. When subscribing to their services, a series of pre-checked options lead, more or less explicitly, to an agreement to provide personal data, which will then influence the user experience. Among the elements criticised in the report is the absence of phrases which would lead users to make more sensible choices with regard to their data, thus forcing behaviour in a particular direction. Counting on the impatience of the user, the decision is made almost immediately, and changing settings later is a long and complex process. We’re very far here from privacy by design and by default, explicitly defined in Article 25 of the GDPR.

As for agreement to the use of third-party cookies (allowing the site to share users’ navigation data with other companies for various purposes, including the use of targeted advertising), there are also numerous violations. Below is an example involving Tumblr, where users are forced to untick more than 350 boxes if they want to prevent their personal data being shared with the platform’s business partners.

Some seriously unethical UX design from @tumblr – forcing anyone to untick 350+ boxes in order to prevent EACH INDIVIDUAL AD COMPANY from using our data. Taking the piss. #darkpatterns #unethicalUI #unethicaldesign pic.twitter.com/emjN4qG1Ox

– Depressing Vibes Sam (@Millstab) May 24, 2018

This is an extreme case, but it is all too common for websites to offer anti-privacy options by default. Clearly, this goes against Recital 32 of the GDPR, which states that “Silence, pre-ticked boxes or inactivity should not therefore constitute consent”. This is given further elaboration in Article 4, where “consent” is understood as “any freely given, specific, informed and unambiguous indication of the data subject’s wishes […]”.
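Pre-ticked boxes of the kind ruled out by the GDPR text quoted above are easy to spot in a page’s markup. The following is a minimal sketch, using only Python’s standard library, of how one might list them. It assumes the consent form is plain HTML available as a string; the class name `PreTickedFinder`, the function `find_pre_ticked` and the sample form are all hypothetical, and boxes that are ticked by a script after the page loads would go unnoticed.

```python
from html.parser import HTMLParser


class PreTickedFinder(HTMLParser):
    """Collects the names of checkboxes that are already ticked in the markup."""

    def __init__(self):
        super().__init__()
        self.pre_ticked = []

    def handle_starttag(self, tag, attrs):
        if tag != "input":
            return
        attr = dict(attrs)
        is_checkbox = (attr.get("type") or "").lower() == "checkbox"
        # A bare `checked` attribute is reported with the value None,
        # so test for the key rather than for its value.
        if is_checkbox and "checked" in attr:
            label = attr.get("name") or attr.get("id") or "(unnamed)"
            self.pre_ticked.append(label)


def find_pre_ticked(html: str) -> list:
    """Return the name (or id) of every pre-ticked checkbox in `html`."""
    finder = PreTickedFinder()
    finder.feed(html)
    return finder.pre_ticked


# Hypothetical sign-up form: one pre-ticked box, one empty box, one text field.
sample_form = """
<form>
  <input type="checkbox" name="marketing_emails" checked>
  <input type="checkbox" name="accept_terms">
  <input type="text" name="email">
</form>
"""

flagged = find_pre_ticked(sample_form)
```

Here `flagged` contains only `marketing_emails`, the one option the user never actively chose.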

The dark patterns in such cases are not just design elements, but a deliberate strategy to obtain users’ consent to provide personal data which is not necessarily essential for access to the services in question. A study involving 80,000 internet users in Germany demonstrated that the way in which information is presented to users (vocabulary, graphics, positioning) clearly influences their interaction with the cookie banner: when the website applies privacy settings by default and specific GDPR requirements, fewer than 0.1 percent of users consent to their data being transmitted to a third party.

The laws are there, but who’s in charge of enforcing them?

“The principles of privacy by design and privacy by default are fundamental components of the GDPR. […] Dark patterns are a way for companies to circumvent these principles by ruthlessly nudging consumers to disregard their privacy and to provide more personal data than necessary. That is a problem we have to address.” (From an interview with Giovanni Buttarelli, the European Data Protection Supervisor, who died on 20 August 2019, given at the opening of a round table on dark patterns held on 27 April in Brussels.)

Gianclaudio Malgieri is a legal expert and researcher at the University of Brussels, where he also works on the Panelfit project to develop guidelines on consent in the use of data in European research. As Malgieri explains, when it comes to e-commerce, “dark patterns is a new name for a phenomenon that has been around for quite a while. The law concerning the unfair influence on consumers has been around for more than fifteen years, while there was a more laissez-faire approach before that”.

The Directive on Unfair Terms in Consumer Contracts, approved by the European Union in 1993 (93/13/EEC), was the first, timid step towards the defence of consumer interests. “Directive 2005/29/EC finally confronted the theme of deceptive or aggressive persuasion,” Malgieri explains, “at a time when there was still no recognition of consumers’ cognitive biases. According to the directive, deceptive practices are those that present incomplete, inaccurate or exaggerated information about the product; the more aggressive practices push the user to perform actions which they would not otherwise perform, and are thus more controversial from the ethical, deontological perspective. Dark patterns are all of these things, even if so far no directive mentions them explicitly.”

One of the first European authorities to fine Facebook for this type of practice was the Italian Competition Authority, which, at the end of 2018, fined the company 10 million euro for unfair business practices. The two main arguments behind the ruling were: 1. In the registration phase, information on the use of personal data is “lacking in directness, clarity and completeness”; 2. The option to share user data is pre-checked, without explicit consent, and the ability to opt out is only provided at a later time (and this choice inhibits the full enjoyment of the service). This second practice also occurs on sites other than Facebook, which allow users to log in with other social media accounts (an option often offered by Google too): “it’s an option which clearly relies on users choosing the standard options, with claims concerning portability pushing the user to provide unnecessary data”.

It’s interesting to note that it’s an authority on competition, not on data protection, which is the first to tackle dark patterns. “Perhaps the guardians of privacy lack the right structure to deal with it,” continues Malgieri. “I have the impression that, all in all, at the legal level the European Union is equipped to deal with dark patterns, despite an absence of explicit references. It would be good if the European Data Protection Board (EDPB) would intervene — with interpretations geared towards fairness and examples relating to dark patterns — along with the European Commission, which should place dark pattern types in the black list of unfair business practices”.

This view is shared by Rossana Ducato, researcher with the Catholic University of Louvain in Belgium. According to Ducato, a path out of this impasse involves an integrated approach. “There are those who want an ad hoc legislative approach, as proposed in the United States with the DETOUR Act [presented in April, and closed for now], but in Europe there is no legal vacuum. However, there are still various problems, such as establishing an authority which could enforce the rules, as well as the problem of definitions and distinguishing the various types of dark patterns. This is why opening a broad, serious debate between all interested parties, and eventually drafting common guidelines, is likely to be the more elegant strategy. We don’t need an umpteenth directive: we need to work on the application of the laws and user awareness”.

One of the problems limiting the effectiveness of the laws is the lack of a common methodology for identifying dark patterns. For this reason, it’s worth looking at the work of Claude Castelluccia, director of research at the French Research Institute For Digital Sciences (INRIA). The aim of his research is the formalisation of a system to determine when there is manipulation of users, by employing machine learning techniques. According to the classification proposed by his research group, dark patterns which undermine the privacy of users fall into the category of execution attacks, or actions which make things so complicated that they lead the user to give up on opposing them.

The judgement which shed light on this mechanism was passed in January 2019 by CNIL (National Commission on Informatics and Liberty), the French authority for data protection. CNIL fined Google 50 million euro, for reasons similar to those for which Facebook was fined in Italy. Among other things, Google had made it overly complicated for users to access essential information, such as the scope of data collection, the duration of its storage, and the type of personal data used for targeted advertising. The information was available on various pages, but required many clicks in order to be reached. CNIL also deals with dark patterns in a special issue of its Cahiers, an excellent compendium of what is known, to date, about the issue.